Handbook on non-discriminating algorithms

Algorithms are increasingly used for risk-based operations and automated decision-making. However, this approach carries a serious risk, especially with machine-learning systems: it is no longer clear how decisions are made. The child allowance affair showed how badly this can go wrong. In that affair, the Dutch Tax and Customs Administration systematically discriminated against minority groups, in part through its use of certain algorithms.

Handbook

As part of a study commissioned by the Netherlands Ministry of the Interior and Kingdom Relations, a team of researchers from Tilburg University, Eindhoven University of Technology, the Free University of Brussels (VUB), and the Netherlands Institute for Human Rights has written a handbook. It explains, step by step, how organizations that want to use artificial intelligence can avoid using biased algorithms. The research team examined the technical, legal, and organizational criteria that need to be taken into account. The handbook can be used in both the public and the private sector.

Download the handbook

The handbook and the underlying research can be downloaded here.

Ten rules

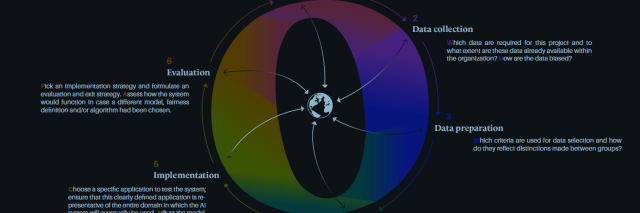

The handbook sets out ten rules to help prevent abuses such as the above-mentioned child allowance affair at the Dutch Tax and Customs Administration.

- Involve stakeholders

Stakeholders and groups affected by automated decision-making must be involved in developing the algorithm from the very start, not only at the end of the process. There should be regular evaluation points with stakeholders throughout development.

- Think twice

At present, implementing agencies and companies often opt for automated decision-making and a risk-based approach by default, unless there are substantial objections. Algorithms by definition make decisions based on group characteristics and do not take into account the specific circumstances of individual cases. The risk of bias is therefore inherent in the choice to use AI. For that reason, it is essential to first investigate whether the objectives can be achieved without an algorithm.

- Pay attention to the context

The use of algorithms makes processes model-based, which is efficient and can produce more consistent outcomes. However, the rules an algorithm learns are based on a data reality, and that reality regularly becomes disconnected from the real world and the human dimension. Decision-making processes must therefore involve a person who conducts checks: What is the algorithm doing now, and does that actually make sense? For the same reason, the team working on an AI project should be as diverse as possible in both professional and personal background. This includes the ethnicity, gender, sexual orientation, cultural and religious background, and age of its members.

- Check for bias in the data

Algorithms work with data, but the data that organizations hold are often incomplete and may therefore present a distorted picture. An algorithm trained on data that reflect the world as it is will learn that men are more likely than women to be invited to an interview for a managerial position. Neighborhoods with many residents from a migrant background are overrepresented in some police databases. An algorithm trained on such a database will conclude that crime is particularly prevalent in those neighborhoods. Someone must therefore always check whether the data are balanced, complete, and up to date.

- Define a clear objective

Success criteria must be identified before the algorithm is developed. What margin of error is acceptable, and how does it compare with that of the existing process? What benefits would the AI system need to deliver to justify the investment? One year after the system goes into operation, it must be evaluated against these benchmarks. If they have not been met, the system should in principle be discontinued.

- Monitor permanently

Algorithms are often self-learning, which means they adapt to the context in which they are used. However, it is impossible to predict how such an algorithm will develop, mainly because that context can change as well. The system must therefore be monitored permanently. Even if the automated decision-making is not biased when it is first deployed, that may change after a year or within as little as a month.

- Involve external experts

Businesses and government organizations are hardly ever neutral about their own systems: costs have been incurred, time has been invested, and prestige is at stake. The evaluation of the system should therefore not be left to the organization itself; external experts must be involved. These experts must have knowledge of the relevant domain and of the applicable legal rules and technical standards.

- Check for indirect bias

Monitoring algorithms should not only address whether the system makes decisions explicitly based on discriminatory grounds such as race, sexual orientation, religion, or age. Even if an automated decision-making system does not take these factors into account directly, it can still do so indirectly. For instance, a predictive policing system that uses postal code areas can lead to indirect discrimination. It may advise the police to put more officers on the beat in neighborhoods such as the Bijlmer (Amsterdam) or the Schilderswijk (The Hague), where many residents have a migrant background. The risk of self-fulfilling prophecies looms large. More patrols in an area lead to more recorded incidents there, and those incidents then appear to confirm the system's prediction.
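The postal code example can be made concrete with a small audit sketch. It assumes a hypothetical scoring rule that flags everyone in a listed area, applied to an invented sample of eight people. The names `HIGH_RISK_AREAS`, `flag_rates_by_group`, `protected_share_by_area`, and `REVIEW_THRESHOLD` are illustrative and do not come from the handbook. The rule never sees the protected attribute, yet comparing flag rates per group still exposes the indirect effect. The per-area share of the protected group shows why the postal code works as a proxy.

```python
from collections import defaultdict

# Hypothetical scoring rule: flags everyone in a listed postal code area.
# It never looks at ethnicity or any other protected ground directly.
HIGH_RISK_AREAS = {"area_A"}

# Invented audit sample of (postal_code, belongs_to_protected_group).
# Auditors hold the protected attribute; the scoring rule never sees it.
PEOPLE = [
    ("area_A", True), ("area_A", True), ("area_A", True), ("area_A", False),
    ("area_B", True), ("area_B", False), ("area_B", False), ("area_B", False),
]

# Hypothetical review threshold, chosen for illustration only.
REVIEW_THRESHOLD = 0.8


def flag(postal_code: str) -> bool:
    """The model's decision, based on postal code alone."""
    return postal_code in HIGH_RISK_AREAS


def flag_rates_by_group(people):
    """Fraction of each group (protected or not) that the rule flags."""
    flagged, total = defaultdict(int), defaultdict(int)
    for postal_code, protected in people:
        total[protected] += 1
        flagged[protected] += flag(postal_code)
    return {group: flagged[group] / total[group] for group in total}


def protected_share_by_area(people):
    """Share of the protected group per area; a large spread marks a proxy."""
    members, total = defaultdict(int), defaultdict(int)
    for postal_code, protected in people:
        total[postal_code] += 1
        members[postal_code] += protected
    return {area: members[area] / total[area] for area in total}


def flag_rate_ratio(rates):
    """Lowest group flag rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


rates = flag_rates_by_group(PEOPLE)
ratio = flag_rate_ratio(rates)
print(rates)                            # {True: 0.75, False: 0.25}
print(protected_share_by_area(PEOPLE))  # {'area_A': 0.75, 'area_B': 0.25}
if ratio < REVIEW_THRESHOLD:
    print(f"Flag-rate ratio {ratio:.2f}: review for indirect bias")
```

A low ratio only signals that the system makes a distinction. Whether that distinction is legitimate still has to be assessed, so the check supports the legal review rather than replacing it.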

- Check legitimacy

Not all differentiation is prohibited; even direct or indirect discrimination can be permitted in some cases. For instance, an automated decision-making system that helps a casting agency select application letters is allowed to discriminate based on sex when the role is for an actress. Likewise, using postal code areas or other factors that may lead to indirect discrimination is not prohibited in every case; it depends on the context. The system should therefore always be checked for whether it makes a distinction. It should also be checked for how and why it does so, and whether there are good reasons for it.

- Document everything

Document all decisions relating to data collection, data selection, the development of the algorithm, and any changes made to it. The documentation must be understandable to citizens who want to invoke their rights and to the external experts who carry out the independent audit. It must also be understandable to supervisory authorities that may want to check the system, such as the Dutch Data Protection Authority.
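One way to keep such documentation is an append-only decision log that can be exported in a readable format. The sketch below is a hypothetical structure, not one prescribed by the handbook. The `DecisionRecord` fields, the fixed set of stages, and the JSON export are assumptions chosen to mirror the kinds of decisions listed above. The example entry is invented.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class DecisionRecord:
    """One documented decision (field names are hypothetical)."""
    decided_on: str   # ISO date, e.g. "2021-03-01"
    stage: str        # one of DecisionLog.STAGES
    decision: str     # what was decided, in plain language
    rationale: str    # why, so citizens, auditors, and supervisors can follow it
    decided_by: str   # who is accountable for the decision


class DecisionLog:
    """The public API only appends and reads; there is no edit or delete method."""

    # Hypothetical stages matching the decisions the rule asks to document
    STAGES = ("data collection", "data selection", "development", "change")

    def __init__(self) -> None:
        self._entries: list[DecisionRecord] = []

    def record(self, entry: DecisionRecord) -> None:
        """Append a decision, rejecting stages outside the agreed set."""
        if entry.stage not in self.STAGES:
            raise ValueError(f"unknown stage: {entry.stage!r}")
        self._entries.append(entry)

    def entries(self, stage: str | None = None) -> list[DecisionRecord]:
        """All entries, optionally filtered by stage, as a new list."""
        return [e for e in self._entries if stage is None or e.stage == stage]

    def export_json(self) -> str:
        """Readable export for external experts and supervisory authorities."""
        return json.dumps([asdict(e) for e in self._entries], indent=2)


# Invented example entry, for illustration only
log = DecisionLog()
log.record(DecisionRecord(
    decided_on="2021-03-01",
    stage="data selection",
    decision="Exclude records older than five years",
    rationale="Older records no longer reflect current policy",
    decided_by="project lead",
))
print(log.export_json())
```

Frozen records and the absence of update methods keep earlier decisions visible. A later change is recorded as a new entry with stage `"change"` rather than by overwriting the original decision.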