Multimodal Language Learning

Our research is inspired by the ease with which young children pick up any language they are exposed to, sometimes several languages at the same time, seemingly with little effort and practically no explicit instruction.


dr. Grzegorz Chrupała

Principal Investigator

The information children rely on is messy and unstructured, but it is rich and multimodal: it includes speech and gestures, visual and auditory perception, and interactions with other people.

In contrast, computers typically learn language only by reading large amounts of written text.

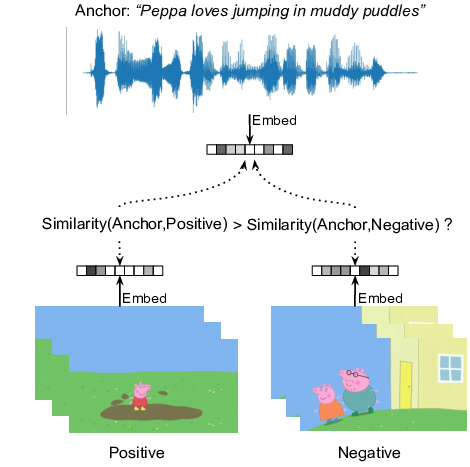

In our lab, we work on enabling machines to access rich data in multiple modalities and to find systematic connections between those modalities, so that they can learn to understand language in a more natural and data-efficient manner. Our approach will help us explore the limits of human-like learning and, if successful, will enable computers to deal not only with the world's largest languages, but also with languages that have little written material or no writing system at all.

Projects

- Understanding visually grounded spoken language via multi-tasking

- Indeep: Interpreting Deep Learning Models for Text and Sound

Partners

- E-Science Center

- KPN

- Textkernel

- Deloitte

- AIgent

- Chordify

- Global textware

- TNO

- FloodTags

- Waag

- muZIEum